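The Model Context Protocol (MCP) can empower LLM agents with potentially hundreds of tools to solve real-world tasks. But how do we make those tools maximally effective?

In this post, we describe our most effective techniques for improving performance in a variety of agentic AI systems.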

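We begin by covering how you can:

- Build and test prototypes of your tools

- Create and run comprehensive evaluations of your tools with agents

- Collaborate with agents like Claude Code to automatically increase the performance of your tools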

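We conclude with the key principles for writing high-quality tools that we identified along the way:

- Choosing the right tools to implement (and which ones not to implement)

- Namespacing tools to define clear boundaries in functionality

- Returning meaningful context from tools back to agents

- Optimizing tool responses for token efficiency

- Prompt-engineering tool descriptions and specs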

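Building an evaluation allows you to systematically measure the performance of your tools. You can use Claude Code to automatically optimize your tools against this evaluation.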

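What is a tool?

In computing, deterministic systems produce the same output every time given identical inputs, while non-deterministic systems (such as agents) can generate varied responses even with the same starting conditions.

When we traditionally write software, we’re establishing a contract between deterministic systems. For instance, a function call like getWeather("NYC") will fetch the weather in New York City in exactly the same manner every time it is called.

Tools are a new kind of software that reflects a contract between deterministic systems and non-deterministic agents. When a user asks “Should I bring an umbrella today?”, an agent might call the weather tool, answer from general knowledge, or even ask a clarifying question about location first. Occasionally, an agent might hallucinate or even fail to grasp how to use a tool.

This means fundamentally rethinking our approach when writing software for agents: instead of writing tools and MCP servers the way we’d write functions and APIs for other developers or systems, we need to design them specifically for agents.

Our goal is to increase the range of tasks over which agents can be effective by giving them tools that support a variety of successful strategies. Fortunately, in our experience, the tools that are most “ergonomic” for agents also end up being very intuitive for humans to grasp.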

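How to write tools

In this section, we describe how you can collaborate with agents both to write and to improve the tools you give them. Start by standing up a quick prototype of your tools and testing them locally. Next, run a comprehensive evaluation to measure subsequent changes. Working alongside agents, you can repeat the process of evaluating and improving your tools until your agents achieve strong performance on real-world tasks.

Building a prototype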

It can be difficult to anticipate which tools agents will find ergonomic and which tools they won’t without getting hands-on yourself. Start by standing up a quick prototype of your tools. If you’re using Claude Code to write your tools (potentially in one-shot), it helps to give Claude documentation for any software libraries, APIs, or SDKs (including potentially the MCP SDK) your tools will rely on. LLM-friendly documentation can commonly be found in flat llms.txt files on official documentation sites (here’s our API’s).


Wrapping your tools in a local MCP server or Desktop extension (DXT) will allow you to connect and test your tools in Claude Code or the Claude Desktop app.


To connect your local MCP server to Claude Code, run claude mcp add <name> <command> [args...].


To connect your local MCP server or DXT to the Claude Desktop app, navigate to Settings > Developer or Settings > Extensions, respectively.


Tools can also be passed directly into Anthropic API calls for programmatic testing.


Test the tools yourself to identify any rough edges. Collect feedback from your users to build an intuition around the use-cases and prompts you expect your tools to enable.


Running an evaluation

Next, you need to measure how well Claude uses your tools by running an evaluation. Start by generating lots of evaluation tasks, grounded in real world uses. We recommend collaborating with an agent to help analyze your results and determine how to improve your tools. See this process end-to-end in our tool evaluation cookbook.


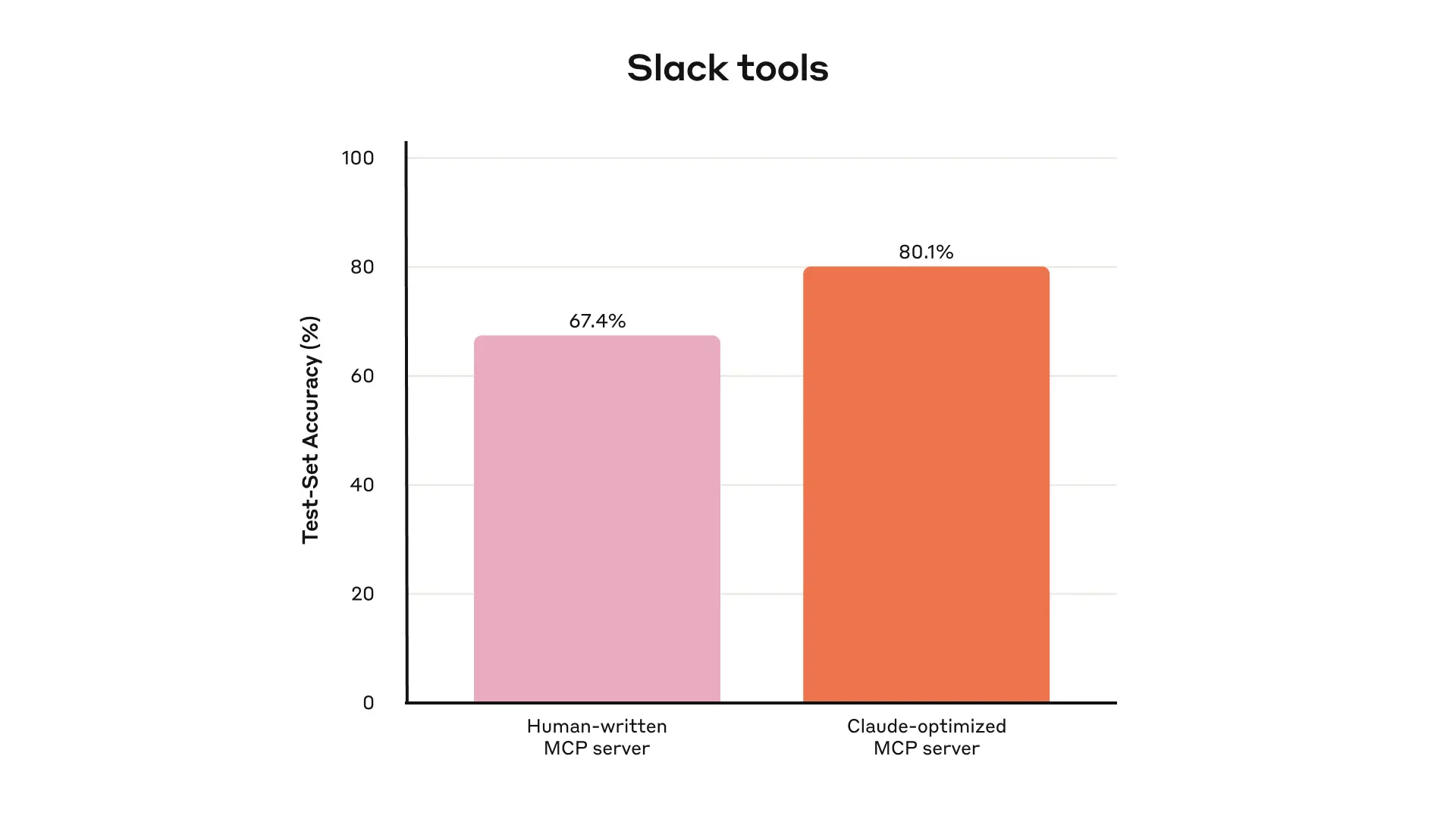

Held-out test set performance of our internal Slack tools

Generating evaluation tasks


With your early prototype, Claude Code can quickly explore your tools and create dozens of prompt and response pairs. Prompts should be inspired by real-world uses and be based on realistic data sources and services (for example, internal knowledge bases and microservices). We recommend you avoid overly simplistic or superficial “sandbox” environments that don’t stress-test your tools with sufficient complexity. Strong evaluation tasks might require multiple tool calls—potentially dozens.


Here are some examples of strong tasks:


- Schedule a meeting with Jane next week to discuss our latest Acme Corp project. Attach the notes from our last project planning meeting and reserve a conference room.

- Customer ID 9182 reported that they were charged three times for a single purchase attempt. Find all relevant log entries and determine if any other customers were affected by the same issue.

- Customer Sarah Chen just submitted a cancellation request. Prepare a retention offer. Determine: (1) why they’re leaving, (2) what retention offer would be most compelling, and (3) any risk factors we should be aware of before making an offer.

And here are some weaker tasks:


- Schedule a meeting with jane@acme.corp next week.

- Search the payment logs for purchase_complete and customer_id=9182.

- Find the cancellation request by Customer ID 45892.

Each evaluation prompt should be paired with a verifiable response or outcome. Your verifier can be as simple as an exact string comparison between ground truth and sampled responses, or as advanced as enlisting Claude to judge the response. Avoid overly strict verifiers that reject correct responses due to spurious differences like formatting, punctuation, or valid alternative phrasings.

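
As a minimal sketch of a verifier that sits between exact string comparison and an LLM judge, the TypeScript below normalizes case, punctuation, and whitespace before comparing a sampled response with the ground truth. The names normalizeAnswer and verifyResponse are hypothetical, and the ASCII-only normalization rule is an assumption you would adapt to your own tasks.

```typescript
// Hypothetical helper: removes differences that should not count against a response.
// Assumption: answers are ASCII; non-English text would need a broader character class.
function normalizeAnswer(text: string): string {
  return text
    .toLowerCase()
    .replace(/[^a-z0-9\s]/g, " ") // replace punctuation and symbols with spaces
    .replace(/\s+/g, " ")         // collapse runs of whitespace
    .trim();
}

// Returns true when the sampled response matches the ground truth after normalization.
function verifyResponse(groundTruth: string, sampled: string): boolean {
  return normalizeAnswer(groundTruth) === normalizeAnswer(sampled);
}
```

A verifier like this still rejects valid alternative phrasings, so it suits tasks with short, factual answers; longer free-form answers may need a model-based judge instead.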

For each prompt-response pair, you can optionally also specify the tools you expect an agent to call in solving the task, to measure whether or not agents are successful in grasping each tool’s purpose during evaluation. However, because there might be multiple valid paths to solving tasks correctly, try to avoid overspecifying or overfitting to strategies.


Running the evaluation

We recommend running your evaluation programmatically with direct LLM API calls. Use simple agentic loops (while-loops wrapping alternating LLM API and tool calls): one loop for each evaluation task. Each evaluation agent should be given a single task prompt and your tools.
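
The loop described above can be sketched roughly as follows. Everything here is hypothetical: ModelFn stands in for a real (normally asynchronous) LLM API call, and the message and tool-call shapes are simplified assumptions rather than any provider's actual wire format.

```typescript
// All names are hypothetical; `ModelFn` is a stand-in for a real LLM API call.
type ToolCall = { name: string; input: Record<string, unknown> };
type ModelTurn =
  | { kind: "tool_call"; call: ToolCall }
  | { kind: "final"; answer: string };
type Message = { role: "user" | "assistant" | "tool"; content: string };
type ModelFn = (messages: Message[]) => ModelTurn;
type Tool = (input: Record<string, unknown>) => string;

// Executes one tool call; failures are returned as text so the model can react to them.
function callTool(tools: Record<string, Tool>, call: ToolCall): string {
  const tool: Tool | undefined = tools[call.name];
  if (tool === undefined) return `Error: unknown tool "${call.name}".`;
  try {
    return tool(call.input);
  } catch (err) {
    return `Error: ${String(err)}`;
  }
}

// Runs one evaluation task: alternate model turns and tool calls until a final answer.
function runTask(prompt: string, model: ModelFn, tools: Record<string, Tool>, maxTurns = 20) {
  const transcript: Message[] = [{ role: "user", content: prompt }];
  let toolCalls = 0;
  let turns = 0;
  while (turns < maxTurns) {
    turns += 1;
    const turn = model(transcript);
    if (turn.kind === "final") {
      transcript.push({ role: "assistant", content: turn.answer });
      return { answer: turn.answer as string | null, toolCalls, transcript };
    }
    toolCalls += 1;
    transcript.push({ role: "assistant", content: JSON.stringify(turn.call) });
    transcript.push({ role: "tool", content: callTool(tools, turn.call) });
  }
  // Turn budget exhausted without a final answer.
  return { answer: null as string | null, toolCalls, transcript };
}
```

Keeping the full transcript alongside the answer makes it possible to review tool calls and tool responses later, not just the final result.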

In your evaluation agents’ system prompts, we recommend instructing agents to output not just structured response blocks (for verification), but also reasoning and feedback blocks. Instructing agents to output these before tool call and response blocks may increase LLMs’ effective intelligence by triggering chain-of-thought (CoT) behaviors.


If you’re running your evaluation with Claude, you can turn on interleaved thinking for similar functionality “off-the-shelf”. This will help you probe why agents do or don’t call certain tools and highlight specific areas of improvement in tool descriptions and specs.


As well as top-level accuracy, we recommend collecting other metrics like the total runtime of individual tool calls and tasks, the total number of tool calls, the total token consumption, and tool errors. Tracking tool calls can help reveal common workflows that agents pursue and offer some opportunities for tools to consolidate.

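
One way to collect these metrics is to log a record per tool call and aggregate afterwards. The sketch below assumes a hypothetical ToolCallRecord shape; the field names are illustrative and would follow whatever your evaluation harness already records.

```typescript
// Hypothetical record shape; adapt the fields to what your harness logs.
type ToolCallRecord = { tool: string; durationMs: number; tokens: number; error: boolean };
type ToolMetrics = { calls: number; errors: number; totalMs: number; totalTokens: number };

// Aggregates per-tool totals so heavily used, slow, or error-prone tools stand out.
function summarizeToolCalls(records: ToolCallRecord[]): Record<string, ToolMetrics> {
  const summary: Record<string, ToolMetrics> = {};
  for (const r of records) {
    const existing: ToolMetrics | undefined = summary[r.tool];
    const m = existing ?? { calls: 0, errors: 0, totalMs: 0, totalTokens: 0 };
    m.calls += 1;
    m.errors += r.error ? 1 : 0;
    m.totalMs += r.durationMs;
    m.totalTokens += r.tokens;
    summary[r.tool] = m;
  }
  return summary;
}
```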

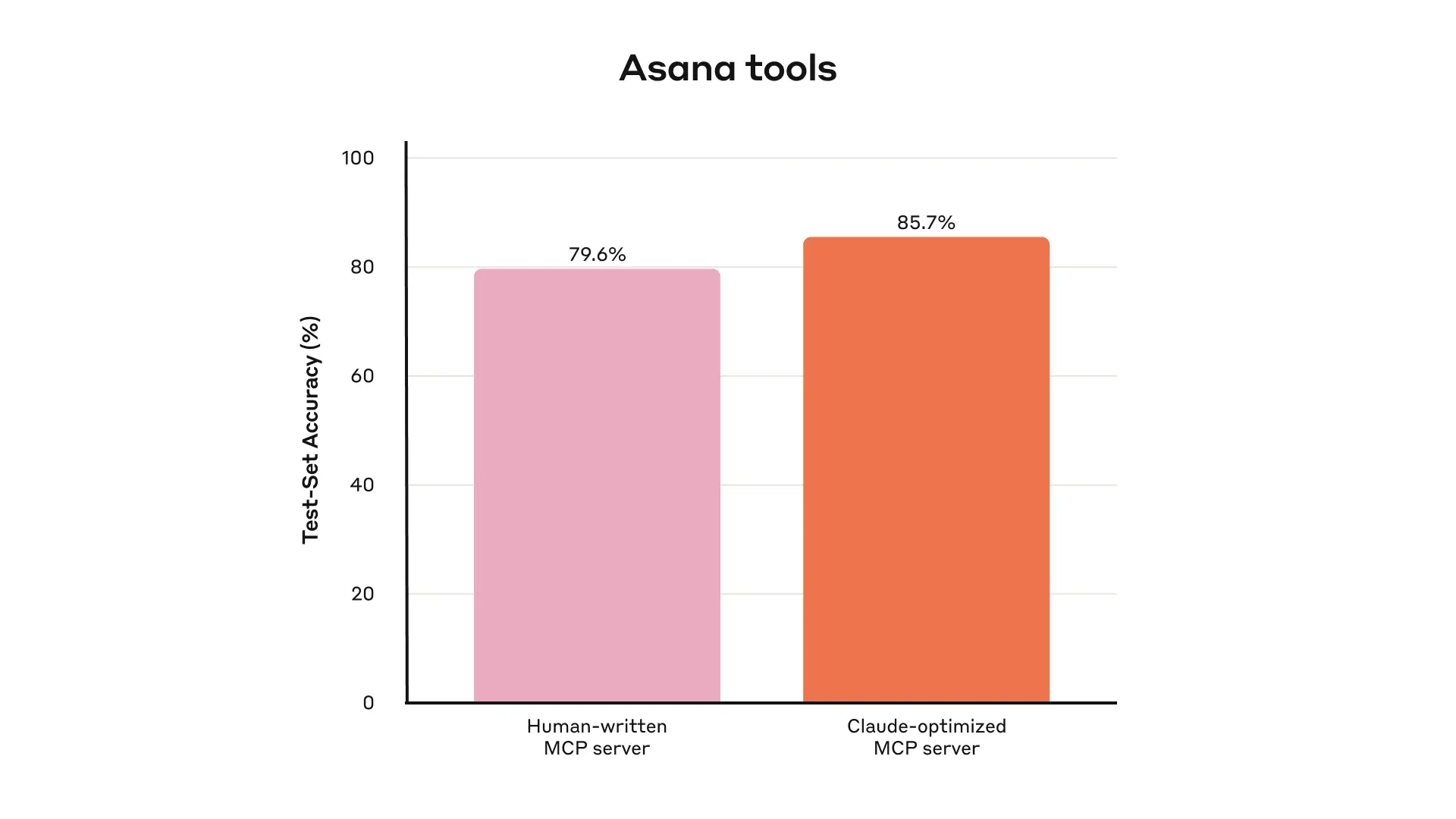

Held-out test set performance of our internal Asana tools

Analyzing results

Agents are your helpful partners in spotting issues and providing feedback on everything from contradictory tool descriptions to inefficient tool implementations and confusing tool schemas. However, keep in mind that what agents omit in their feedback and responses can often be more important than what they include. LLMs don’t always say what they mean.


Observe where your agents get stumped or confused. Read through your evaluation agents’ reasoning and feedback (or CoT) to identify rough edges. Review the raw transcripts (including tool calls and tool responses) to catch any behavior not explicitly described in the agent’s CoT. Read between the lines; remember that your evaluation agents don’t necessarily know the correct answers and strategies.


Analyze your tool calling metrics. Lots of redundant tool calls might suggest some rightsizing of pagination or token limit parameters is warranted; lots of tool errors for invalid parameters might suggest tools could use clearer descriptions or better examples. When we launched Claude’s web search tool, we identified that Claude was needlessly appending 2025 to the tool’s query parameter, biasing search results and degrading performance (we steered Claude in the right direction by improving the tool description).


Collaborating with agents

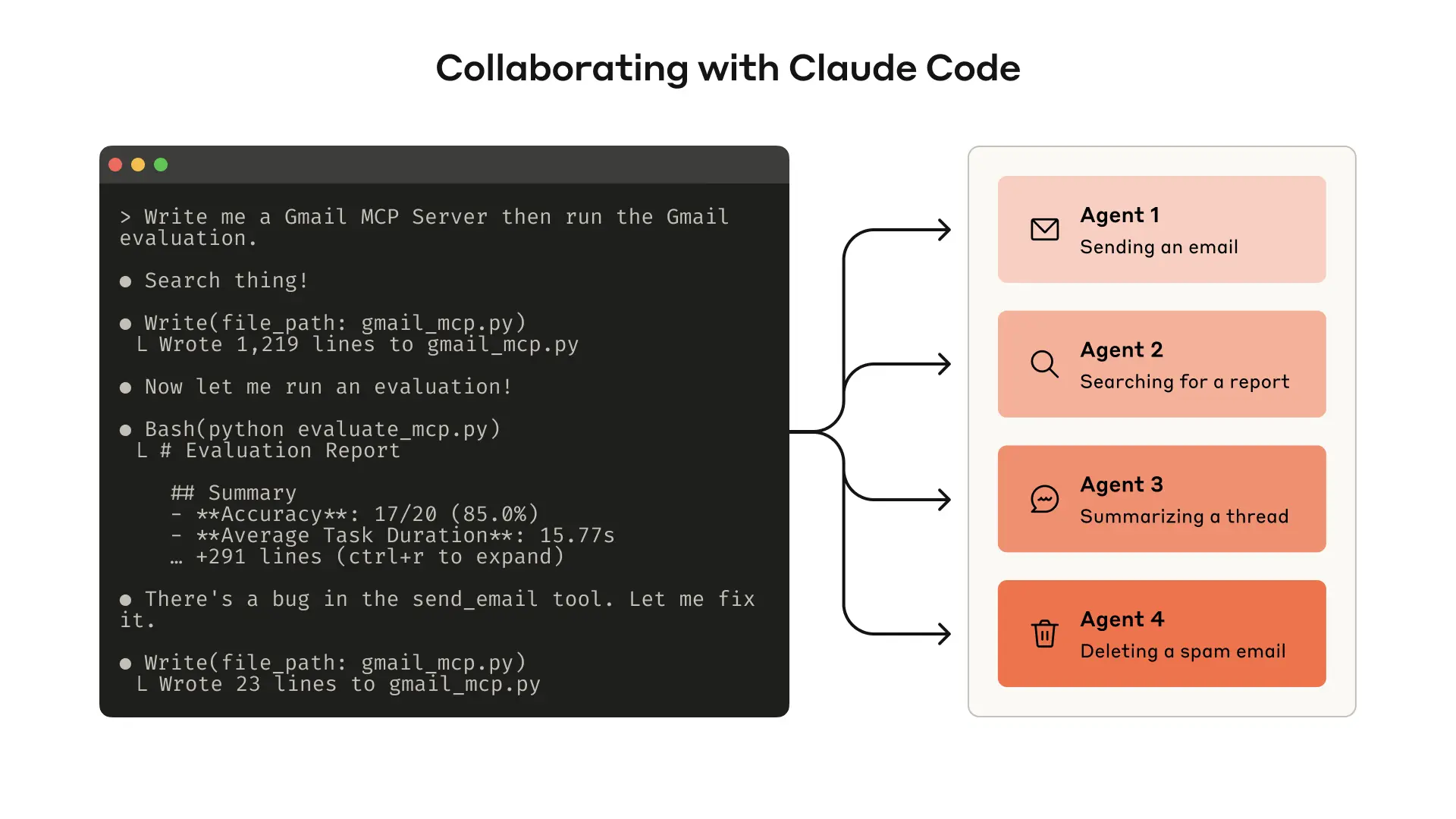

You can even let agents analyze your results and improve your tools for you. Simply concatenate the transcripts from your evaluation agents and paste them into Claude Code. Claude is an expert at analyzing transcripts and refactoring lots of tools all at once—for example, to ensure tool implementations and descriptions remain self-consistent when new changes are made.


In fact, most of the advice in this post came from repeatedly optimizing our internal tool implementations with Claude Code. Our evaluations were created on top of our internal workspace, mirroring the complexity of our internal workflows, including real projects, documents, and messages.


We relied on held-out test sets to ensure we did not overfit to our “training” evaluations. These test sets revealed that we could extract additional performance improvements even beyond what we achieved with “expert” tool implementations—whether those tools were manually written by our researchers or generated by Claude itself.


In the next section, we’ll share some of what we learned from this process.


Principles for writing effective tools

In this section, we distill our learnings into a few guiding principles for writing effective tools.


Choosing the right tools for agents

More tools don’t always lead to better outcomes. A common error we’ve observed is tools that merely wrap existing software functionality or API endpoints, whether or not the tools are appropriate for agents. This is because agents have distinct “affordances” to traditional software: they have different ways of perceiving the potential actions they can take with those tools.

LLM agents have limited “context” (that is, there are limits to how much information they can process at once), whereas computer memory is cheap and abundant. Consider the task of searching for a contact in an address book. Traditional software programs can efficiently store and process a list of contacts one at a time, checking each one before moving on.


However, if an LLM agent uses a tool that returns ALL contacts and then has to read through each one token-by-token, it’s wasting its limited context space on irrelevant information (imagine searching for a contact in your address book by reading each page from top-to-bottom—that is, via brute-force search). The better and more natural approach (for agents and humans alike) is to skip to the relevant page first (perhaps finding it alphabetically).


We recommend building a few thoughtful tools that target specific high-impact workflows and match your evaluation tasks, then scaling up from there. In the address book case, you might choose to implement a search_contacts or message_contact tool instead of a list_contacts tool.
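
To make the address book case concrete, here is a minimal sketch of what a search_contacts tool might do internally. The Contact type, the in-memory CONTACTS list, and the limit parameter are all assumptions made for illustration; a real tool would query your actual contact store.

```typescript
type Contact = { name: string; email: string };

// Hypothetical in-memory address book standing in for a real data source.
const CONTACTS: Contact[] = [
  { name: "Jane Doe", email: "jane@example.com" },
  { name: "John Smith", email: "john@example.com" },
  { name: "Janet Lee", email: "janet@example.com" },
];

// Returns only the contacts whose name matches, capped at `limit`,
// so the agent never has to read the whole address book.
function searchContacts(query: string, limit = 5): Contact[] {
  const needle = query.trim().toLowerCase();
  if (needle === "") return [];
  return CONTACTS
    .filter((c) => c.name.toLowerCase().indexOf(needle) !== -1)
    .slice(0, limit);
}
```

The cap on results is a design choice: it bounds how much context a single call can consume even when the query is broad.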

Tools can consolidate functionality, handling potentially multiple discrete operations (or API calls) under the hood. For example, tools can enrich tool responses with related metadata or handle frequently chained, multi-step tasks in a single tool call.


Here are some examples:

- Instead of implementing list_users, list_events, and create_event tools, consider implementing a schedule_event tool which finds availability and schedules an event.

- Instead of implementing a read_logs tool, consider implementing a search_logs tool which only returns relevant log lines and some surrounding context.

- Instead of implementing get_customer_by_id, list_transactions, and list_notes tools, implement a get_customer_context tool which compiles all of a customer’s recent and relevant information at once.
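
As an illustration of the last example, a get_customer_context tool might be sketched like this. The data stores, record shapes, customer ID, and output layout are hypothetical; a real implementation would call your own services in place of the in-memory arrays.

```typescript
// Hypothetical in-memory data; a real tool would query your own services instead.
type Customer = { name: string; plan: string };
type Transaction = { customerId: number; date: string; amount: number };
type Note = { customerId: number; text: string };

const CUSTOMERS: Record<number, Customer> = { 101: { name: "Example Customer", plan: "Pro" } };
const TRANSACTIONS: Transaction[] = [
  { customerId: 101, date: "2025-03-01", amount: 49 },
  { customerId: 101, date: "2025-04-01", amount: 59 },
  { customerId: 7, date: "2025-04-02", amount: 10 },
];
const NOTES: Note[] = [{ customerId: 101, text: "Asked about annual pricing." }];

// One call stands in for get_customer_by_id + list_transactions + list_notes.
function getCustomerContext(customerId: number, maxTransactions = 3): string {
  const customer: Customer | undefined = CUSTOMERS[customerId];
  if (customer === undefined) {
    return `No customer found with ID ${customerId}.`;
  }
  const recent = TRANSACTIONS
    .filter((t) => t.customerId === customerId)
    .sort((a, b) => (a.date < b.date ? 1 : a.date > b.date ? -1 : 0)) // newest first
    .slice(0, maxTransactions);
  const notes = NOTES.filter((n) => n.customerId === customerId);
  const lines = [`Customer: ${customer.name} (plan: ${customer.plan})`, "Recent transactions:"];
  for (const t of recent) lines.push(`- ${t.date}: $${t.amount}`);
  lines.push("Notes:");
  for (const n of notes) lines.push(`- ${n.text}`);
  return lines.join("\n");
}
```

The intermediate lists never reach the agent; only the compiled summary does.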

Make sure each tool you build has a clear, distinct purpose. Tools should enable agents to subdivide and solve tasks in much the same way that a human would, given access to the same underlying resources, and simultaneously reduce the context that would have otherwise been consumed by intermediate outputs.


Too many tools or overlapping tools can also distract agents from pursuing efficient strategies. Careful, selective planning of the tools you build (or don’t build) can really pay off.


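Namespacing your tools

Your AI agents will potentially gain access to dozens of MCP servers and hundreds of different tools, including those built by other developers. When tools overlap in function or have a vague purpose, agents can get confused about which ones to use.

Namespacing (grouping related tools under common prefixes) can help delineate boundaries between lots of tools; MCP clients sometimes do this by default. For example, namespacing tools by service (e.g., asana_search, jira_search) and by resource (e.g., asana_projects_search, asana_users_search) can help agents select the right tools at the right time.

We have found that the choice between prefix- and suffix-based namespacing has non-trivial effects on our tool-use evaluations. Effects vary by LLM, and we encourage you to choose a naming scheme according to your own evaluations.

Agents might call the wrong tools, call the right tools with the wrong parameters, call too few tools, or process tool responses incorrectly. By selectively implementing tools whose names reflect natural subdivisions of tasks, you simultaneously reduce the number of tools and tool descriptions loaded into the agent’s context and offload agentic computation from the agent’s context back into the tool calls themselves. This reduces an agent’s overall risk of making mistakes.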
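
Because the better naming scheme depends on your own evaluations, it can help to generate tool names from one place so that switching schemes is a one-line change. The helper below is a hypothetical sketch; in particular, the exact shape of the suffix style (action first, then service and resource) is our assumption.

```typescript
// Hypothetical helper for comparing naming schemes in an evaluation.
// "prefix" yields names like asana_projects_search; "suffix" (our assumption of what
// a suffix style looks like) yields names like search_asana_projects.
type NamingScheme = "prefix" | "suffix";

function namespacedToolName(
  service: string,
  resource: string | null,
  action: string,
  scheme: NamingScheme = "prefix",
): string {
  const scope = resource === null ? [service] : [service, resource];
  const parts = scheme === "prefix" ? scope.concat([action]) : [action].concat(scope);
  return parts.join("_");
}
```

For example, namespacedToolName("asana", "projects", "search") produces asana_projects_search, matching the by-resource naming described above.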

Returning meaningful context from your tools

In the same vein, tool implementations should take care to return only high signal information back to agents. They should prioritize contextual relevance over flexibility, and eschew low-level technical identifiers (for example: uuid, 256px_image_url, mime_type). Fields like name, image_url, and file_type are much more likely to directly inform agents’ downstream actions and responses.


Agents also tend to grapple with natural language names, terms, or identifiers significantly more successfully than they do with cryptic identifiers. We’ve found that merely resolving arbitrary alphanumeric UUIDs to more semantically meaningful and interpretable language (or even a 0-indexed ID scheme) significantly improves Claude’s precision in retrieval tasks by reducing hallucinations.

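
A 0-indexed ID scheme can be sketched as a small two-way mapping kept on the tool side: the agent only ever sees short integers, and the tool translates them back to UUIDs when a later call refers to one. The IdResolver class below is hypothetical and holds its mapping in memory for the lifetime of a session.

```typescript
// Hypothetical helper: exposes short 0-indexed IDs to the agent in place of UUIDs.
class IdResolver {
  private shortByUuid: Record<string, number> = {};
  private uuids: string[] = [];

  // Returns the short ID for a UUID, assigning the next free index on first sight.
  shorten(uuid: string): number {
    const existing: number | undefined = this.shortByUuid[uuid];
    if (existing !== undefined) return existing;
    const id = this.uuids.length;
    this.shortByUuid[uuid] = id;
    this.uuids.push(uuid);
    return id;
  }

  // Translates a short ID from the agent back to the underlying UUID.
  resolve(id: number): string {
    const uuid: string | undefined = this.uuids[id];
    if (uuid === undefined) {
      throw new Error(`Unknown ID ${id}.`);
    }
    return uuid;
  }
}
```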

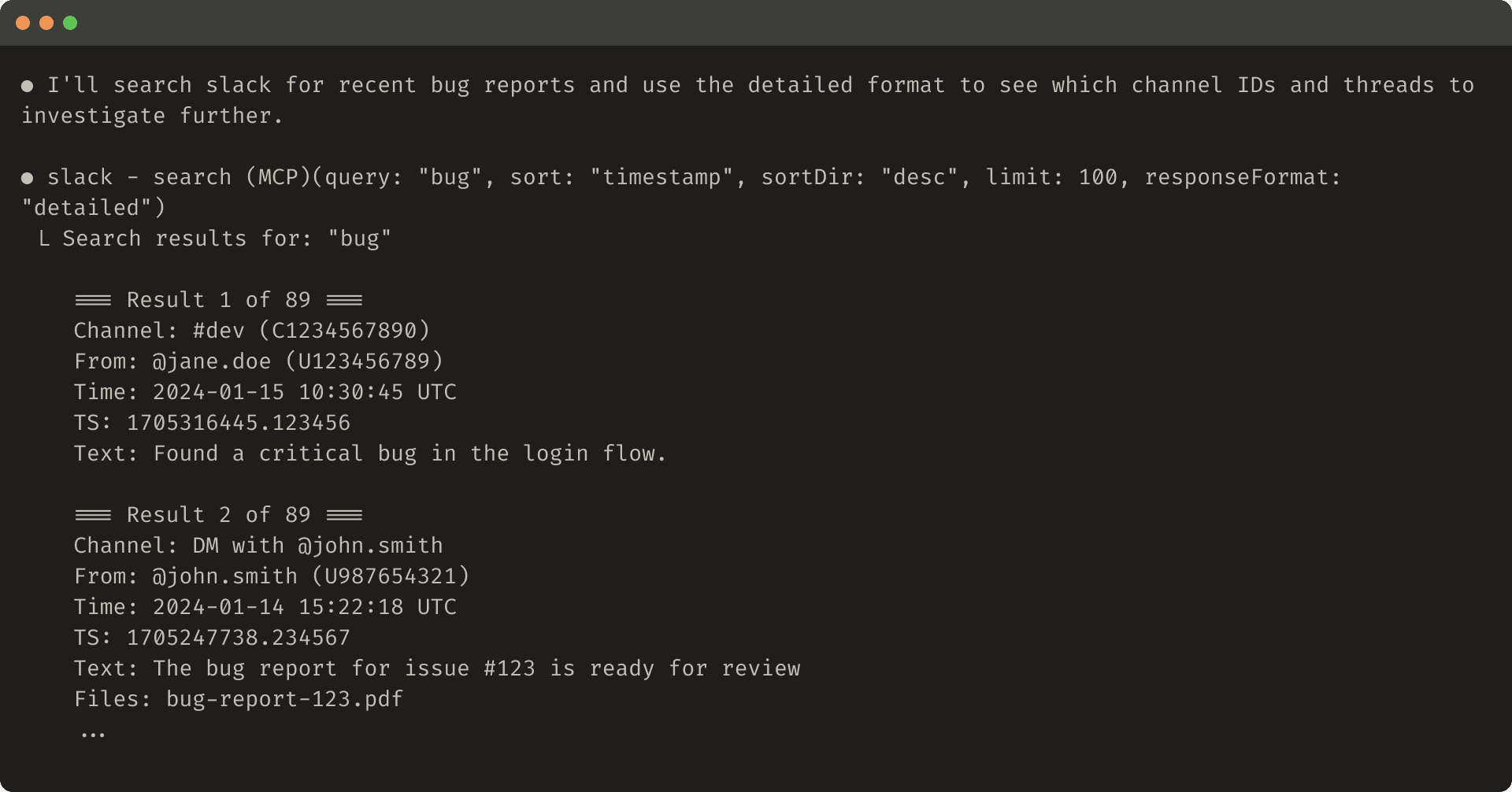

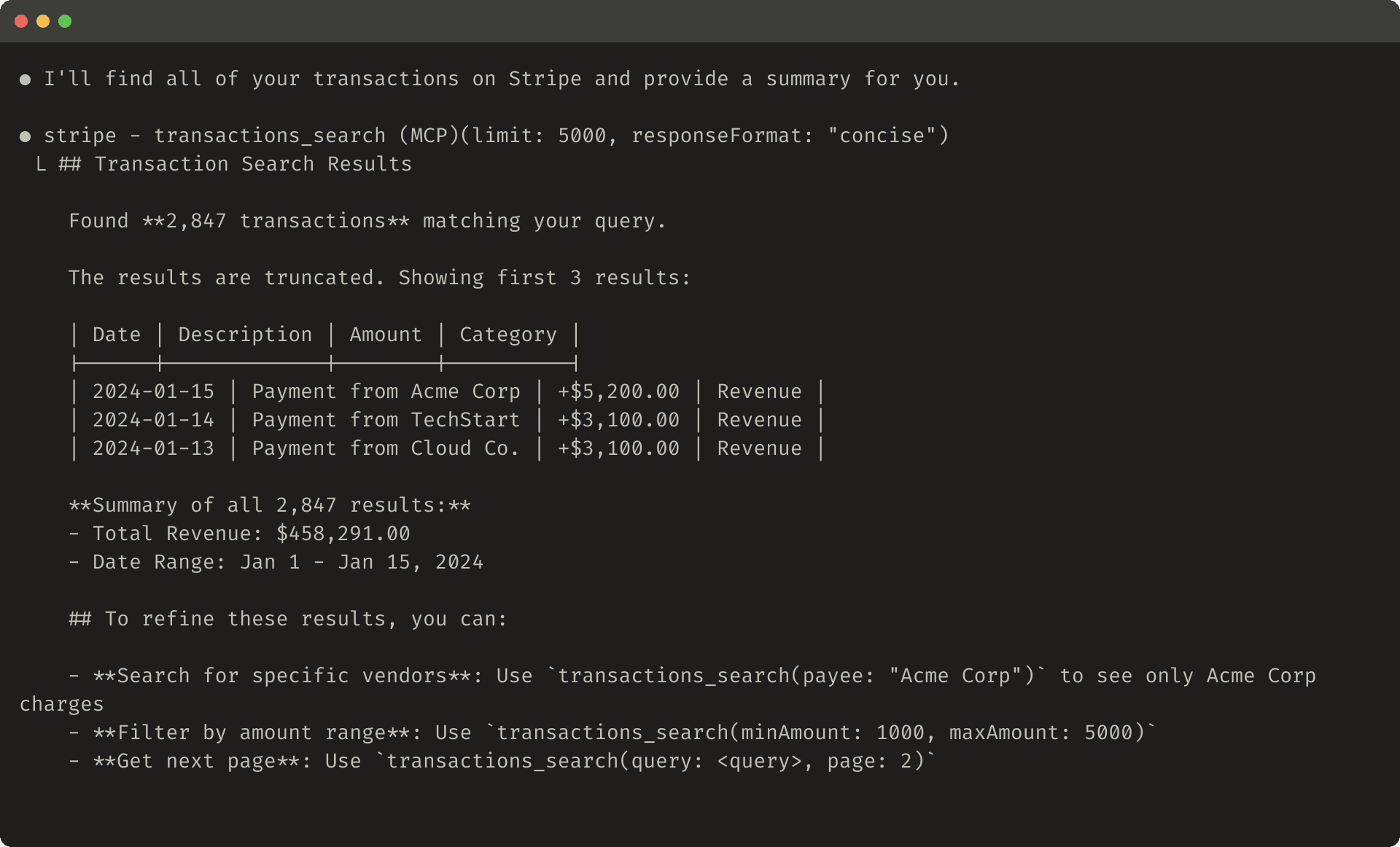

In some instances, agents may require the flexibility to interact with both natural language and technical identifier outputs, if only to trigger downstream tool calls (for example, search_user(name='jane') → send_message(id=12345)). You can enable both by exposing a simple response_format enum parameter in your tool, allowing your agent to control whether tools return “concise” or “detailed” responses (images below).

You can add more formats for even greater flexibility, similar to GraphQL where you can choose exactly which pieces of information you want to receive. Here is an example ResponseFormat enum to control tool response verbosity:


    enum ResponseFormat {
      DETAILED = "detailed",
      CONCISE = "concise"
    }

Here’s an example of a detailed tool response (206 tokens):


This code snippet depicts an example of a detailed tool response.

Here’s an example of a concise tool response (72 tokens):

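
As a rough illustration of how a tool might honor the response_format parameter, the sketch below returns the same search hits in two levels of detail. The Thread shape, the sample data, and the searchThreads function are hypothetical and are not the responses pictured in the original post.

```typescript
enum ResponseFormat {
  DETAILED = "detailed",
  CONCISE = "concise",
}

// Hypothetical thread record; the field names are illustrative only.
type Thread = { threadTs: string; channelId: string; userId: string; author: string; text: string };

const THREADS: Thread[] = [
  {
    threadTs: "1700000000.000100",
    channelId: "C123",
    userId: "U456",
    author: "jane",
    text: "Outage postmortem is scheduled for Friday.",
  },
];

// Concise responses keep only what the agent reads; detailed responses add the
// identifiers needed for follow-up tool calls.
function searchThreads(query: string, format: ResponseFormat = ResponseFormat.CONCISE): string {
  const needle = query.toLowerCase();
  return THREADS
    .filter((t) => t.text.toLowerCase().indexOf(needle) !== -1)
    .map((t) =>
      format === ResponseFormat.DETAILED
        ? `${t.author}: ${t.text} (thread_ts=${t.threadTs}, channel_id=${t.channelId}, user_id=${t.userId})`
        : `${t.author}: ${t.text}`,
    )
    .join("\n");
}
```

Defaulting to the concise format keeps routine calls cheap, while the agent can still opt into identifiers when it needs to chain another tool call.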

Even your tool response structure—for example XML, JSON, or Markdown—can have an impact on evaluation performance: there is no one-size-fits-all solution. This is because LLMs are trained on next-token prediction and tend to perform better with formats that match their training data. The optimal response structure will vary widely by task and agent. We encourage you to select the best response structure based on your own evaluation.


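Optimizing tool responses for token efficiency

Optimizing the quality of context is important. But so is optimizing the quantity of context returned to agents in tool responses.

We suggest implementing some combination of pagination, range selection, filtering, and/or truncation, with sensible default parameter values, for any tool response that could use up a lot of context. For Claude Code, we restrict tool responses to 25,000 tokens by default. We expect the effective context length of agents to grow over time, but the need for context-efficient tools to remain.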

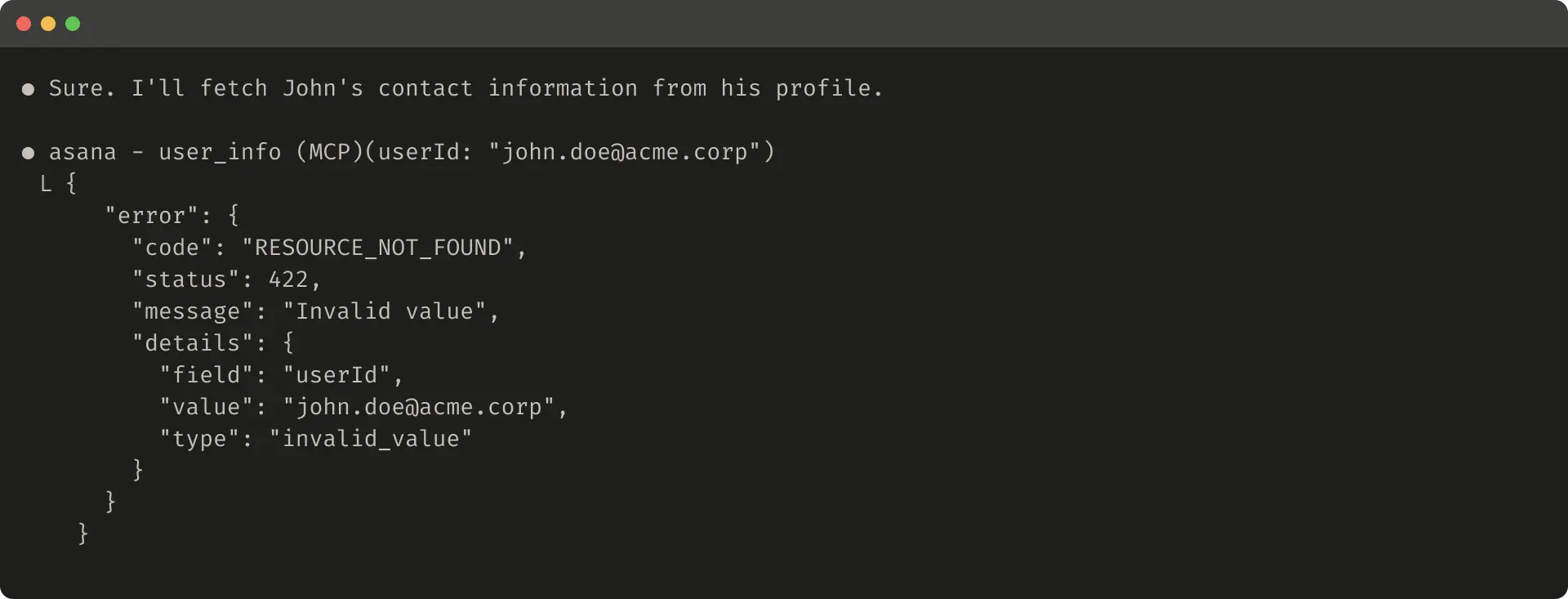

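If you choose to truncate responses, be sure to steer agents with helpful instructions. You can directly encourage agents to pursue more token-efficient strategies, such as making many small and targeted searches instead of a single broad search in a knowledge retrieval task. Similarly, if a tool call raises an error (for example, during input validation), you can prompt-engineer your error responses to clearly communicate specific and actionable improvements, rather than opaque error codes or tracebacks.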
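
A minimal sketch of truncation with steering, under two stated assumptions: the limit is counted in characters for simplicity (a real tool would more likely count tokens), and the wording of the notice is only an example. The function name truncateResponse and the constant MAX_RESPONSE_CHARS are hypothetical.

```typescript
// Hypothetical limit, counted in characters for simplicity.
const MAX_RESPONSE_CHARS = 200;

// Keeps whole result lines until the budget is used up, then tells the agent
// how much was omitted and what to do about it.
function truncateResponse(results: string[], maxChars = MAX_RESPONSE_CHARS): string {
  const kept: string[] = [];
  let used = 0;
  for (const line of results) {
    if (used + line.length + 1 > maxChars) break; // +1 accounts for the newline
    kept.push(line);
    used += line.length + 1;
  }
  const body = kept.join("\n");
  if (kept.length === results.length) return body;
  const omitted = results.length - kept.length;
  const notice =
    `[Truncated: showing ${kept.length} of ${results.length} results; ${omitted} omitted. ` +
    `Narrow the query or request a specific page to see the rest.]`;
  return body === "" ? notice : body + "\n" + notice;
}
```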

Here’s an example of a truncated tool response:


This image depicts an example of a truncated tool response.

Here’s an example of an unhelpful error response:


This image depicts an example of an unhelpful tool response.

Here’s an example of a helpful error response:

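
For a sense of what a helpful error response could look like in code, the sketch below validates input for a hypothetical tool that takes a user_id and a date, and returns messages that name the bad parameter, show the expected format, and suggest a next step. The validator, its parameters, and the message wording are assumptions; search_user is the lookup tool mentioned earlier in this post.

```typescript
type ValidationResult = { ok: true } | { ok: false; message: string };

// Hypothetical validator for a tool that takes an integer `user_id` and an ISO `date`.
function validateInput(input: { user_id?: unknown; date?: unknown }): ValidationResult {
  if (typeof input.user_id !== "number" || input.user_id % 1 !== 0) {
    return {
      ok: false,
      message:
        `Invalid "user_id": expected an integer such as 12345, got ${JSON.stringify(input.user_id)}. ` +
        `Use search_user to look up the ID by name.`,
    };
  }
  if (typeof input.date !== "string" || !/^\d{4}-\d{2}-\d{2}$/.test(input.date)) {
    return {
      ok: false,
      message: `Invalid "date": expected YYYY-MM-DD (for example 2025-01-31), got ${JSON.stringify(input.date)}.`,
    };
  }
  return { ok: true };
}
```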

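Prompt-engineering your tool descriptions

We now come to one of the most effective methods for improving tools: prompt-engineering your tool descriptions and specs. Because these are loaded into your agents’ context, they can collectively steer agents toward effective tool-calling behaviors.

When writing tool descriptions and specs, think of how you would describe your tool to a new hire on your team. Consider the context that you might implicitly bring (specialized query formats, definitions of niche terminology, relationships between underlying resources) and make it explicit. Avoid ambiguity by clearly describing expected inputs and outputs, and by enforcing them with strict data models. In particular, input parameters should be unambiguously named: instead of a parameter named user, try a parameter named user_id.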
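
The sketch below shows what an explicit tool definition might look like, using the name, description, and input_schema fields that the Anthropic Messages API accepts for tools. The tool itself, its wording, and its parameters are hypothetical; the local ToolDefinition type is a simplified assumption, not the SDK's own type.

```typescript
// Simplified local type mirroring the name / description / input_schema fields of a tool definition.
type ToolDefinition = {
  name: string;
  description: string;
  input_schema: {
    type: "object";
    properties: Record<string, { type: string; description: string }>;
    required: string[];
  };
};

// Hypothetical tool: every name and sentence below is illustrative.
const searchLogsTool: ToolDefinition = {
  name: "search_logs",
  description:
    "Search application logs and return only the matching lines plus a few lines of surrounding context. " +
    "Queries are plain substrings, not regular expressions. " +
    "Prefer several narrow queries over one broad query.",
  input_schema: {
    type: "object",
    properties: {
      query: {
        type: "string",
        description: "Substring to look for, for example purchase_complete.",
      },
      customer_id: {
        type: "integer",
        description: "Numeric customer ID (for example 9182), not a customer name.",
      },
    },
    required: ["query"],
  },
};
```

Note how the description spells out the query format and the parameter is named customer_id, not customer, so there is no doubt about what value belongs there.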

With your evaluation you can measure the impact of your prompt engineering with greater confidence. Even small refinements to tool descriptions can yield dramatic improvements. Claude Sonnet 3.5 achieved state-of-the-art performance on the SWE-bench Verified evaluation after we made precise refinements to tool descriptions, dramatically reducing error rates and improving task completion.


You can find other best practices for tool definitions in our Developer Guide. If you’re building tools for Claude, we also recommend reading about how tools are dynamically loaded into Claude’s system prompt. Lastly, if you’re writing tools for an MCP server, tool annotations help disclose which tools require open-world access or make destructive changes.


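Looking ahead

To build effective tools for agents, we need to re-orient our software development practices from predictable, deterministic patterns to non-deterministic ones.

Through the iterative, evaluation-driven process described in this post, we’ve identified consistent patterns in what makes tools successful: effective tools are intentionally and clearly defined, use agent context judiciously, can be combined in diverse workflows, and enable agents to intuitively solve real-world tasks.

In the future, we expect the specific mechanisms through which agents interact with the world to evolve, from updates to the MCP protocol to upgrades to the underlying LLMs themselves. With a systematic, evaluation-driven approach to improving tools for agents, we can ensure that as agents become more capable, the tools they use will evolve alongside them.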